Effective Data Collection & Management for Research Assistants

In today’s research landscape, data collection is the backbone of valid, credible results—yet it’s also where most errors begin. Research assistants often juggle dozens of variables, tight timelines, and shifting protocols. Without a structured approach, even minor lapses in data accuracy or documentation can compromise an entire study. This isn’t just about avoiding clerical mistakes; it's about preserving scientific integrity, auditability, and regulatory compliance.

Effective data management starts the moment the first observation is made, not at the point of analysis. Whether it’s biospecimen tracking, patient survey logging, or EDC platform entry, consistent data handling practices reduce risk and increase reproducibility. For research assistants aiming to excel, mastering both collection and daily data workflow execution is no longer optional; it’s foundational. As trials move toward decentralized and digital-first formats, the demand for data fluency is outpacing traditional training. This guide walks through actionable frameworks, toolkits, workflows, and pitfalls to avoid, so that you work not only faster but with fewer errors. Let’s get tactical.

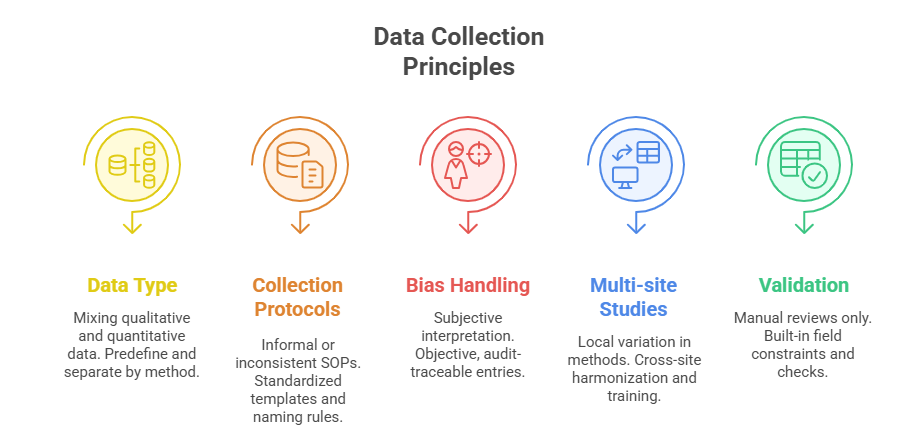

Core Principles of Data Collection in Research

Quantitative vs Qualitative Methods

Research assistants must first understand which data type aligns with the study’s objective—quantitative or qualitative—because each demands a different handling protocol. Quantitative data, like vital signs or lab results, rely on structured formats: timestamps, units, numeric validation. These need tight consistency checks during input to avoid skewed results. On the other hand, qualitative data—interviews, open-ended responses, observations—demand depth over volume. Here, contextual integrity matters more than formatting, and transcription accuracy becomes critical.

Choosing the wrong approach—or mixing methods without clarity—creates downstream confusion. Mixed-methods studies require predefined integration strategies. Without them, assistants risk collecting incompatible or non-actionable data that cannot be statistically or thematically synthesized.

Standardizing Collection Protocols

One of the fastest ways to reduce error is establishing and enforcing standard operating procedures (SOPs) for each collection method. Every field entry should be backed by a written rule: format for date entry, units of measurement, rounding logic, or how missing values are coded (e.g., NA vs 0).
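As a rough illustration of what "a written rule for every field" can look like once it is enforced in software, the sketch below checks a date format and a numeric field. The function names, the `NA` missing-value code, and the 20 to 300 kg range are assumptions made for the example, not values from any particular SOP.

```python
import re
from datetime import datetime
from typing import Optional

# Hypothetical code for "no value recorded"; a real study would take this from its SOP.
MISSING_CODES = {"NA"}


def is_iso_date(value: str) -> bool:
    """Accept only zero-padded YYYY-MM-DD strings that name a real calendar date."""
    if not re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True


def parse_weight_kg(value: str) -> Optional[float]:
    """Return the weight in kg rounded to one decimal, or None when coded as missing."""
    if value in MISSING_CODES:
        return None
    weight = float(value)  # raises ValueError for free text such as "70kg"
    if not 20.0 <= weight <= 300.0:  # assumed plausibility range, not a clinical standard
        raise ValueError(f"weight {weight} kg is outside the allowed range")
    return round(weight, 1)
```

Note that a missing weight entered as `NA` comes back as `None`, while `0` is rejected as an implausible measurement. That keeps "not recorded" and "recorded as zero" from being confused later.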

Templates, pre-validated data sheets, and consistent tool usage (like REDCap or Castor) create audit trails and reduce ambiguity. Assistants should always aim to mirror case report form (CRF) logic across tools, even in exploratory phases. Teams should also agree upfront on protocol deviations—how to flag them, how to annotate them, and when escalation is needed.

When working across multi-site studies, cross-location alignment on protocols is non-negotiable. Even a minor deviation in one center—such as a different scale used to measure pain—can invalidate comparative results.

Avoiding Bias and Errors

Bias creeps into data when assistants assume instead of document. A missing field should be noted, not guessed. If a patient skips a question, it must be logged with a flag—not filled in based on assumption. Observer bias is equally dangerous: describing what a subject “likely meant” instead of quoting them exactly corrupts source data.

To reduce bias:

Train assistants on neutral language and double-entry validation

Cross-check entries with another team member weekly

Use data dictionaries with locked variable ranges

Log observational context without editorializing
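Two of the items above, double-entry validation and locked variable ranges, lend themselves to simple automated checks. The following is a minimal sketch; the variable names and ranges in `DATA_DICTIONARY` are hypothetical and would come from the study's own data dictionary.

```python
# Hypothetical data dictionary: variable name -> (minimum, maximum) allowed value.
DATA_DICTIONARY = {
    "pain_score": (0, 10),
    "systolic_bp": (60, 260),
}


def out_of_range(record: dict) -> list:
    """List the variables in a record whose values fall outside the locked range."""
    return [
        name
        for name, (low, high) in DATA_DICTIONARY.items()
        if name in record and not low <= record[name] <= high
    ]


def double_entry_mismatches(first: dict, second: dict) -> dict:
    """Compare two independent entries of the same form, field by field."""
    fields = sorted(set(first) | set(second))
    return {
        name: (first.get(name), second.get(name))
        for name in fields
        if first.get(name) != second.get(name)
    }
```

For example, if one assistant keys `systolic_bp` as 128 and a second keys 182, `double_entry_mismatches` returns that field with both values so the pair can be resolved against the source document rather than by guessing.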

Another overlooked risk is collection fatigue. When a study spans weeks or months, assistants may unconsciously relax data standards. Regular micro-audits, protocol refreshers, and random data sampling are essential to maintain consistency across time and team members.

Choosing the Right Tools & Platforms

Electronic Data Capture (EDC) Systems

Electronic Data Capture (EDC) systems are the central nervous system of modern research workflows. Platforms like REDCap, Medrio, and Castor allow research assistants to input, track, and clean data in real time. Unlike spreadsheets, EDCs apply built-in validation checks, audit trails, and form logic, which together prevent incomplete or invalid submissions. For multi-arm studies or high-volume trials, EDCs are reported to reduce time spent on manual corrections by over 60%, according to clinical informatics benchmarks.

The best systems offer:

Role-based permissions to ensure data access aligns with study roles

Branching logic to eliminate irrelevant fields

Automated discrepancy reporting

Assistants trained in configuring EDC forms are more efficient and less prone to protocol violations—a valuable asset in fast-paced environments.
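Branching logic is easiest to understand as a rule that decides which fields exist for a given participant. The sketch below is a plain-Python illustration of the idea, not the configuration syntax of REDCap or any other EDC; the field names are hypothetical.

```python
def visible_fields(answers: dict) -> list:
    """Return the fields that should be shown, given the answers so far."""
    fields = ["smoker"]
    # The follow-up question only applies to participants who report smoking.
    if answers.get("smoker") == "yes":
        fields.append("cigarettes_per_day")
    return fields


def missing_required(answers: dict) -> list:
    """Return visible fields that are still empty; hidden fields are never required."""
    return [name for name in visible_fields(answers) if answers.get(name) in (None, "")]
```

With this rule, a non-smoker's form is complete without `cigarettes_per_day`, while a smoker's form is flagged until that field is filled in.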

Cloud-Based Storage Tools

Storing research data on local devices or outdated servers creates security gaps and collaboration bottlenecks. Cloud-based platforms like Google Drive (with institutional security layers), Microsoft OneDrive, or Box allow synchronous team access, version history, and device-agnostic login. For assistants managing site data, being able to upload source docs in real time prevents backlog buildup.

However, all files must follow naming conventions and controlled folder structures, or chaos quickly unfolds. Cloud doesn’t mean disorganized—it demands greater discipline. Assistants should:

Create study-specific root folders

Set access restrictions by document type

Tag files by visit number, date, and participant ID

This ensures any auditor—or remote PI—can find the exact file they need without assistant intervention.

HIPAA-Compliant Platforms

When dealing with Protected Health Information (PHI), assistants must go beyond convenience and prioritize platforms that meet HIPAA, GDPR, and 21 CFR Part 11 requirements. Slack, Zoom, or Dropbox may not meet compliance thresholds out of the box. HIPAA has no official product certification, so assistants should be trained to use environments that their institution has approved and configured for regulated data, such as:

REDCap Cloud

Citrix ShareFile (configured for healthcare)

Veeva Vault

HIPAA compliance also involves data transmission methods. Uploading patient reports via personal email or public Wi-Fi—even with good intentions—can trigger violations. Every data move must follow encrypted channels, and personal devices should be avoided unless part of an approved Mobile Device Management (MDM) program.

| Tool Type | Risk Without It | Research Benefit |
|---|---|---|
| EDC Systems | Manual errors, version chaos | Audit trails, validation, logic branching |
| Cloud Storage | Local file loss, disorganization | Versioning, universal access, speed |
| HIPAA-Compliant Tools | PHI exposure, regulatory fines | Encrypted handling, role-based access |
| Platform Integration | Fragmented data silos | Real-time sync, seamless collaboration |
| Access Control | Oversharing, role confusion | Tiered permissions, user logs |

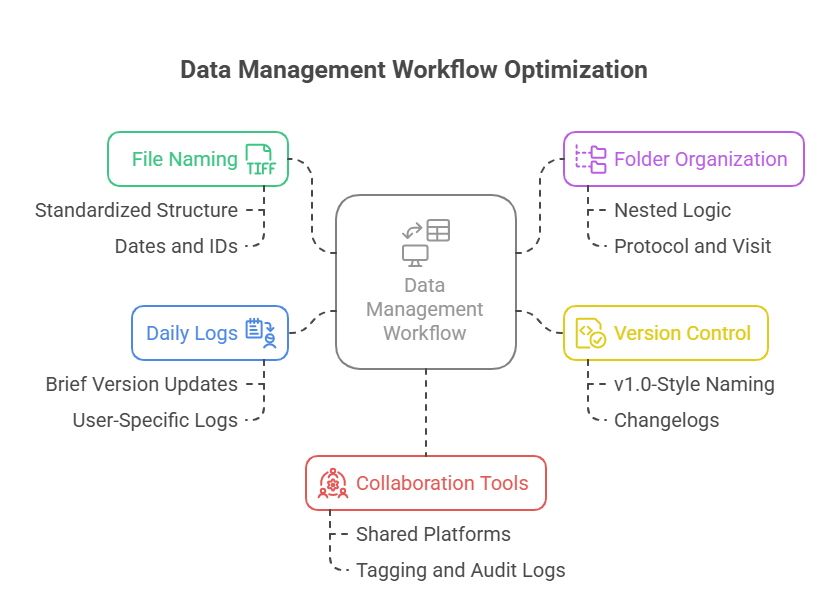

Daily Data Management Workflows

Naming Conventions & File Organization

Disorganized files are a hidden liability. A misnamed source doc or incorrectly filed consent form can derail timelines, confuse teams, or fail audits. Assistants should use clear, consistent naming conventions from day one. For example:

SiteID_ParticipantID_Visit#_DocumentType_Date

e.g., 102_003_V2_InformedConsent_2025-07-01.pdf
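A convention like this can be checked automatically before a file is accepted into the study folder. The sketch below is one possible validator; it assumes three-digit site and participant IDs and a letters-only document type, which matches the example above but may not match every study.

```python
import re
from datetime import datetime
from typing import Optional

# Assumed shape: SiteID_ParticipantID_V<visit>_<DocumentType>_<YYYY-MM-DD>.<ext>
FILENAME_PATTERN = re.compile(
    r"(?P<site>\d{3})_(?P<participant>\d{3})_V(?P<visit>\d+)_"
    r"(?P<doc_type>[A-Za-z]+)_(?P<date>\d{4}-\d{2}-\d{2})\.(?P<ext>[a-z0-9]+)"
)


def parse_filename(name: str) -> Optional[dict]:
    """Split a conforming filename into its parts, or return None if it does not conform."""
    match = FILENAME_PATTERN.fullmatch(name)
    if match is None:
        return None
    try:
        datetime.strptime(match["date"], "%Y-%m-%d")  # reject impossible dates
    except ValueError:
        return None
    return match.groupdict()
```

Running `parse_filename` over a folder listing gives a quick report of files that break the convention, which is easier than spotting them by eye during an audit.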

Folders must be structured with logic that reflects study architecture. Consider:

A main directory for each protocol

Subfolders by visit, participant, or document type

Use of underscore (_) instead of spaces to avoid system errors

Consistency makes retrieval instantaneous and prevents duplication, misplacement, and compliance failures.

Logging & Version Tracking

Without version control, even small updates become dangerous. A protocol amendment or revised CRF must be logged and tracked. Assistants should:

Use version numbers (v1.0, v1.1, etc.) in filenames

Maintain a changelog in a central location (Excel or Notion)

Archive outdated versions clearly marked as obsolete

This process protects the study from accidental use of outdated documents and supports regulatory traceability, which is critical during FDA or EMA inspections.
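For teams without a document management system, the version numbering and changelog described above can be kept consistent with a few lines of code. This is a minimal sketch that assumes a `vMAJOR.MINOR` scheme and a CSV changelog; the column order and function names are illustrative choices.

```python
import csv
from datetime import date
from typing import Optional, TextIO


def next_minor_version(version: str) -> str:
    """Turn 'v1.0' into 'v1.1', treating the minor part as an integer."""
    major, minor = version.lstrip("v").split(".")
    return f"v{major}.{int(minor) + 1}"


def append_changelog(stream: TextIO, document: str, version: str,
                     author: str, summary: str, on: Optional[date] = None) -> None:
    """Write one changelog row: date, document, version, author, change summary."""
    entry_date = on or date.today()
    csv.writer(stream).writerow(
        [entry_date.isoformat(), document, version, author, summary]
    )
```

In practice `stream` would be the changelog file opened in append mode (`open("changelog.csv", "a", newline="")`), so existing rows are never rewritten.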

If multiple assistants touch the same files, a brief end-of-day version sync log ensures no overwrites or data loss occur. This habit is especially vital during data lock or interim analysis periods.

Real-Time Collaboration Tools

As remote trials rise, real-time collaboration is no longer a bonus—it’s a core workflow necessity. Tools like Microsoft Teams, Notion, or Airtable allow simultaneous editing, live status updates, and tag-based assignments. When properly configured, these tools:

Reduce lag between data collection and monitoring

Provide study-wide visibility into task completion

Enable CRAs to leave inline queries or comments instantly

Assistants must learn to use tagging, permission locks, and audit logs to track who changed what—and when. This level of transparency keeps protocol deviations in check and accelerates query resolution timelines.

Data Security, Privacy, and Compliance

Informed Consent & Access Levels

Data privacy begins before collection—with the informed consent process and access tiering. Research assistants must ensure that participants fully understand how their data will be used, stored, and shared. Generic language or rushed explanations open the door to legal and ethical risk. Consent documents must clearly state:

What data will be collected

Who will have access

Duration of storage and retention

Once collected, assistants should enforce access control based on study roles. Not every team member should see PHI or source documents. Set user-specific permissions across platforms—read-only, edit, or admin—to minimize exposure.

Access logs should be regularly reviewed. Suspicious patterns—like after-hours document downloads or access from unauthorized devices—must be flagged for escalation.
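A periodic access-log review can be partly automated. The sketch below flags the two patterns mentioned above; the working hours, the device list, and the shape of each log event are assumptions for illustration, since real platforms export logs in their own formats.

```python
from datetime import datetime, time

# Hypothetical site policy: normal working hours and approved workstations.
WORK_START, WORK_END = time(7, 0), time(19, 0)
APPROVED_DEVICES = {"LAB-PC-01", "LAB-PC-02"}


def suspicious_events(events: list) -> list:
    """Return (user, reasons) pairs for events that should be escalated for review."""
    flagged = []
    for event in events:
        reasons = []
        if not WORK_START <= event["timestamp"].time() < WORK_END:
            reasons.append("after hours")
        if event["device"] not in APPROVED_DEVICES:
            reasons.append("unapproved device")
        if reasons:
            flagged.append((event["user"], reasons))
    return flagged
```

A flag here is a prompt for a human to look, not proof of misuse; a late download may simply be a legitimate overnight monitoring visit.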

Encryption Standards

Encryption is not optional; it’s the baseline expectation for clinical-grade data management. All sensitive data—whether stored locally, on cloud platforms, or in transit—must be encrypted using industry-standard protocols. The minimum standard should be AES-256 for data at rest, and TLS 1.2+ for data in motion.

Assistants must confirm whether their platforms:

Offer end-to-end encryption

Allow encrypted backups

Prevent unauthorized export of files

Never send raw data or spreadsheets through unsecured channels like personal email. Instead, use encrypted portals or institutional file-sharing tools with two-factor authentication. Encryption isn’t just about tech—it’s about the discipline of using it consistently.
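For teams that script their own uploads, the TLS 1.2+ baseline for data in motion can be enforced in code rather than left to defaults. The sketch below uses only Python's standard `ssl` module; the function name is illustrative. It covers transport only, and AES-256 encryption at rest would normally be provided by the storage platform or a vetted cryptography library.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()  # certificate and hostname checks are on
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The returned context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=strict_tls_context())`, so that a connection to a server offering only older protocol versions fails instead of silently downgrading.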

Regulatory Frameworks (GCP, GDPR, HIPAA)

Understanding regulations isn’t just a compliance checkbox—it’s the map for how assistants handle every stage of the data lifecycle. Three major frameworks apply:

Good Clinical Practice (GCP): Requires that data is attributable, legible, contemporaneous, original, and accurate (ALCOA). Assistants should always log who collected data, when, and how.

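General Data Protection Regulation (GDPR): Applies to studies that process personal data of participants in the EU. Where consent is the legal basis, it must be clear and specific to each type of data use. GDPR also provides a right to erasure and sets strict breach notification requirements.

Health Insurance Portability and Accountability Act (HIPAA): Protects individually identifiable health information (PHI) handled by covered entities and their business associates in the U.S. Civil penalties are tiered by level of culpability, and even unintentional violations can be fined; at the highest tiers, fines can exceed $50,000 per violation.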

Assistants must internalize these rules. If unsure, they should escalate rather than improvise. Compliance isn't just legal protection—it's scientific credibility insurance.

| Security Component | Risk When Ignored | Correct Action |
|---|---|---|
| Informed Consent | Legal exposure, revocation | Clear, specific, and versioned forms |
| Access Levels | Oversharing PHI | Role-restricted user access |
| Encryption | Breach, data leaks | AES-256 and TLS protocols used |
| Regulations | Protocol violations | Align with HIPAA, GCP, GDPR |
| Data Transmission | Email leaks, unauthorized access | Use encrypted portals only |

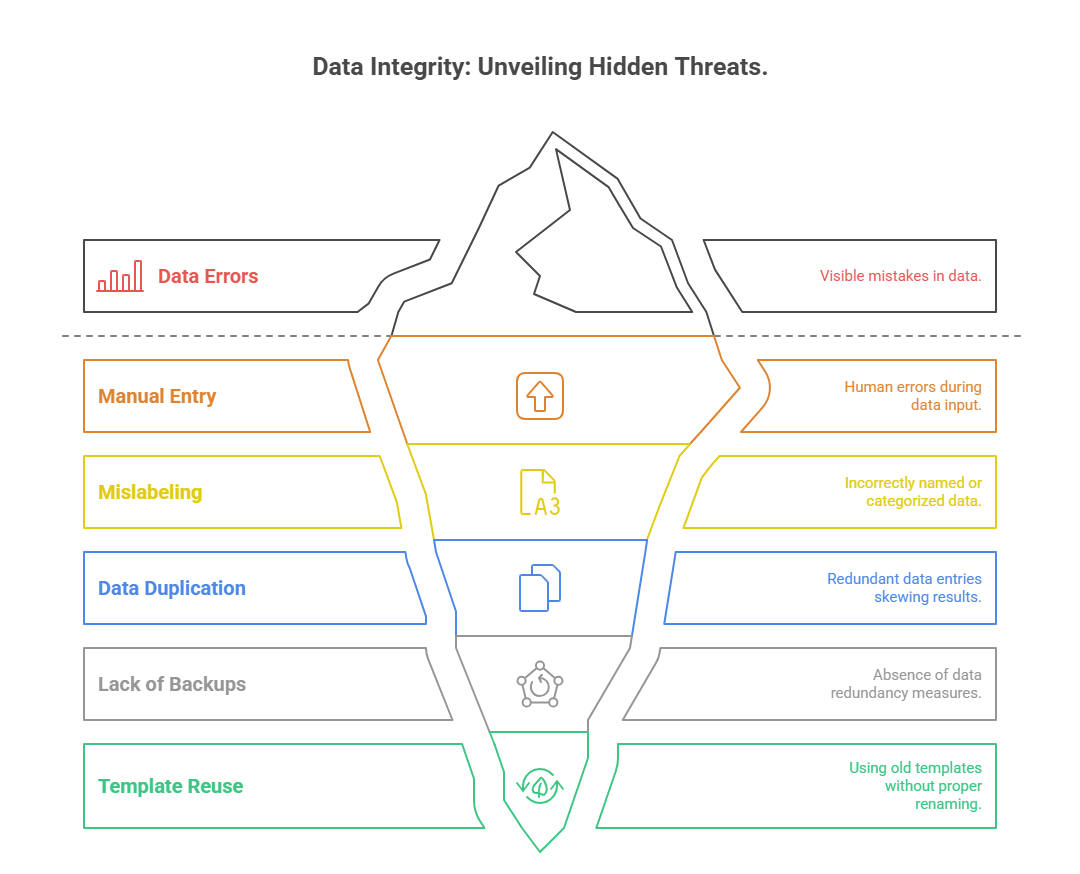

Common Mistakes Research Assistants Must Avoid

Manual Entry Pitfalls

Manual data entry is still common in early-phase trials and smaller academic studies, but it introduces avoidable error at every step. Typing speed doesn’t equal accuracy: fatigue, environmental distractions, and unvalidated fields all contribute to mistakes. Even a single-digit error in a lab value can misclassify an adverse event or a participant’s eligibility.

Assistants should avoid:

Copying handwritten notes directly without verification

Entering data without timestamps or source linkage

Skipping double-checks for numeric inputs

Where EDCs are unavailable, assistants should still use Excel with dropdown validations, color-coded alerts, and entry logs. Without safeguards, data becomes unreliable, and rework becomes inevitable.

Mislabeling or Data Duplication

Mislabeling affects both electronic and paper-based workflows. A mislabeled consent form or data file can lead to audit red flags or subject misidentification—a catastrophic breach in regulated environments. This usually happens when:

Shortcuts are taken during file saving

Templates are reused without renaming

Spreadsheets are duplicated and modified without new IDs

Every entry should be uniquely traceable. Assistants must audit filenames and participant codes weekly. Tools like conditional formatting or document management systems can help flag duplicates or misfiles automatically.

Data duplication, especially across study arms or visit timepoints, leads to false positives or exaggerated patterns. Assistants should implement checksum tools, version control, or automated deduplication checks to avoid this entirely.
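An automated deduplication check can be as simple as counting how often each participant and visit combination appears. The sketch below assumes each row carries `participant_id` and `visit` keys; those names are placeholders for whatever identifies a unique record in a given study.

```python
from collections import Counter


def duplicate_keys(rows: list, key_fields: tuple = ("participant_id", "visit")) -> list:
    """Return the key combinations that appear in more than one row."""
    counts = Counter(tuple(row[name] for name in key_fields) for row in rows)
    return sorted(key for key, count in counts.items() if count > 1)
```

The function only reports duplicates. Deciding which copy is correct still requires going back to the source document.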

Lack of Backups

One of the most overlooked errors is failing to create redundant backups. Devices crash. Shared folders get wiped. Assistants should maintain at least:

One cloud-based backup

One institutional network drive copy

One encrypted offline version (weekly or biweekly)

Backup logs should include the date, responsible person, and checksum verification when possible. Skipping this practice not only wastes time but can compromise months of study progress if files are corrupted or lost.
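The backup log described above can be generated at the moment the copy is made. This is a minimal sketch using SHA-256 as the checksum; the function names and the fields of the returned log entry are illustrative, and a real setup would usually rely on IT-managed backup tooling.

```python
import hashlib
import shutil
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def backup_with_verification(source: Path, backup_dir: Path, person: str) -> dict:
    """Copy a file into backup_dir and return a log entry with checksum verification."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also preserves file timestamps
    source_hash, target_hash = sha256_of(source), sha256_of(target)
    return {
        "date": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "person": person,
        "file": source.name,
        "sha256": source_hash,
        "verified": source_hash == target_hash,
    }
```

Any entry with `verified` set to `False` means the copy does not match the original and the backup should be repeated before the log is closed for the day.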

Many research assistants learn these mistakes the hard way—after a data loss, not before it. Institutionalize these workflows early, and recovery will never become your main strategy.

How ACRACC Certification Boosts Data Management Skills

The Advanced Clinical Research Assistant Certification (ACRACC) offered by CCRPS directly addresses the data challenges assistants face in modern clinical environments. This certification isn’t just theory—it’s grounded in the real workflows, tools, and regulatory protocols assistants must navigate from day one.

Participants are trained in:

Electronic Data Capture (EDC) systems setup and logic building

Cloud-based document handling using audit-ready naming conventions

Logging, version control, and regulatory-aligned data workflows (HIPAA, GCP, GDPR)

Unlike basic certifications, ACRACC emphasizes advanced skills such as query resolution, discrepancy logging, and real-time collaboration across decentralized sites. This allows assistants to not only enter data—but also manage, audit, and troubleshoot it.

Graduates leave with:

Confidence in managing large datasets

Familiarity with encrypted platforms and compliance expectations

Templates, SOPs, and toolkits they can immediately implement

For research assistants who want to move beyond clerical roles and into regulatory-compliant, audit-ready data stewardship, the ACRACC provides both the foundation and the future-facing edge.

Frequently Asked Questions

What is the difference between raw data and source data?

Raw data refers to the unprocessed information collected during a study—often from lab instruments, surveys, or direct observation. Source data, however, is the original record of those results, such as a lab report or signed case report form (CRF). Regulatory agencies like the FDA and EMA require that all study conclusions trace back to source data. For assistants, this means storing both raw entries and their source in an auditable, accessible format. Any changes to raw data must be justified, timestamped, and never overwrite the original. Maintaining this chain of integrity is critical for compliance, especially during audits and inspections.

How often should research data be backed up?

At minimum, data backups should occur daily for active trials, especially those collecting high volumes of data. In academic or observational studies, backups every 2–3 days may suffice, but the standard is daily. Best practice includes three tiers of redundancy: cloud-based auto-sync, local secure drives, and weekly offline encrypted copies. Every backup must be logged with timestamps, filenames, and checksum confirmation where possible. Assistants should never rely on manual reminders—automated backup software or IT-managed scheduling ensures consistent protection without human error.

How can research assistants avoid bias when recording observations?

Avoiding bias starts with the mindset that the assistant is a recorder, not an interpreter. Use exact phrasing when documenting subject behavior or verbal responses. Avoid making assumptions about tone, intent, or meaning. Stick to observable facts—for example, write “subject crossed arms and did not respond for 30 seconds” rather than “subject appeared hostile.” Use structured observation templates when available, and double-enter or peer-review critical entries. Bias also arises when assistants anticipate outcomes, so it's crucial to remain blinded to hypotheses when possible and review training on neutral, objective data practices regularly.

What is the best way to track document versions?

Version tracking is best managed using document management systems like Notion, Confluence, or specialized research tools like MasterControl or Veeva Vault. These platforms allow time-stamped edits, changelogs, and role-based permissions. In smaller setups, assistants can version files manually using numbered naming conventions (e.g., v1.0, v1.1, v2.0), avoiding ambiguous labels such as "Final" that say nothing about sequence. It’s essential to include a change summary either in a readme file or within the document metadata. Additionally, storing outdated versions in an "Archive" folder, rather than deleting them, ensures regulatory traceability and prevents accidental loss of information during review or re-submission.

How should data entry be handled in multilingual studies?

In multilingual studies, data entry must follow centralized language standards to ensure cross-site consistency. Assistants should use validated translations for all participant-facing materials and input data into the EDC or spreadsheet using a single target language—typically English. If local-language text fields are allowed, metadata should tag the entry language to avoid confusion. It’s also best to train assistants on cultural context that might affect interpretation, especially in qualitative studies. Mistranslations can skew themes, so validation from native-speaking team members or certified translators is essential during transcription and coding stages.

How should corrections or amendments to collected data be documented?

All amendments must preserve the original entry and show a clear audit trail. In EDC platforms, this is done using built-in edit logs. In spreadsheets, it requires adding a new column or row for the corrected entry and referencing the original with a timestamp and initials. Never overwrite or delete original data: doing so destroys the audit trail that GCP requires and can put the study out of compliance. Assistants should also log why the amendment occurred (e.g., “subject clarified date of symptom onset”). Any major corrections should be co-signed or reviewed by the PI or CRA, especially during locked phases or near interim analysis periods.
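The "never overwrite" rule can be modeled as a field that keeps its original value and only ever appends corrections. The sketch below is an illustration of that idea, not how any particular EDC implements its edit log; the class and attribute names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Amendment:
    value: str
    reason: str
    initials: str
    timestamp: datetime


@dataclass
class AuditedField:
    original: str
    amendments: list = field(default_factory=list)

    def amend(self, value: str, reason: str, initials: str) -> None:
        """Append a correction; the original value is never modified."""
        if not reason.strip():
            raise ValueError("an amendment needs a documented reason")
        self.amendments.append(
            Amendment(value, reason, initials, datetime.now(timezone.utc))
        )

    @property
    def current(self) -> str:
        """The latest amended value, or the original if nothing was amended."""
        return self.amendments[-1].value if self.amendments else self.original
```

Because corrections are appended rather than written over the original, a reviewer can always see what was first recorded, what it was changed to, who changed it, when, and why.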

How can study data be secured on mobile devices?

Securing data on mobile devices requires institutional Mobile Device Management (MDM) software, which enforces encryption, access controls, and remote wipe capabilities. Assistants should never store PHI or raw study data on personal devices unless explicitly authorized. Approved apps like REDCap Mobile or encrypted cloud platforms should be used exclusively. Devices must be password-protected, with biometric authentication if possible. Disable auto-sync to unsecured apps (e.g., iCloud, WhatsApp), and ensure file-sharing settings restrict downloads. Lost or stolen devices must be reported immediately. Physical and digital safeguards must work in tandem for full compliance.

Can research assistants collect data without direct supervision?

Yes, but only if the assistant has been delegated responsibility through a signed delegation log and documented training. The study’s principal investigator (PI) or clinical research coordinator (CRC) must confirm the assistant is qualified to handle the specific data type—especially for source documents or electronic case report forms. Any collection must still follow SOPs, use approved forms, and comply with GCP and IRB protocols. Assistants should never improvise methods or deviate from protocol without PI approval. Even unsupervised, data collection must remain traceable, standardized, and legally defensible.

Final Thoughts

Precision in data handling is not just a technical requirement—it’s a core driver of research credibility. Every clinical trial, observational study, or academic investigation relies on error-free, reproducible data workflows to generate valid conclusions. For research assistants, the difference between good and great lies in daily discipline: naming files right, logging versions, choosing secure platforms, and minimizing bias at every step.

By mastering these processes early, assistants not only prevent future corrections but actively contribute to the integrity, speed, and success of the entire research team. In a field where small oversights can lead to major consequences, structured data practices are the fastest path to professional reliability and growth.